Find the Optimal Parameters of a Classifier

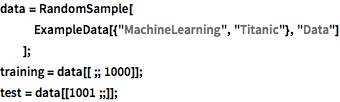

Load a dataset and split it into a training set and a test set.

In[1]:=

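(* Shuffle the examples so that the split below is random *)
data = RandomSample[ExampleData[{"MachineLearning", "Titanic"}, "Data"]];
training = data[[;; 1000]];
test = data[[1001 ;;]];

Define a loss function that trains a classifier with given hyperparameters and returns its negated log-likelihood rate on the test set, so that lower values mean better performance.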

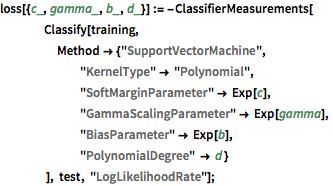

In[2]:=

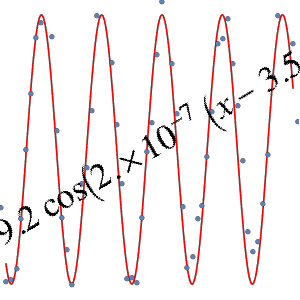

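(* c, gamma and b are searched on a log scale; the measurement is negated so that minimizing the loss maximizes the test log-likelihood rate *)
loss[{c_, gamma_, b_, d_}] := -ClassifierMeasurements[
   Classify[training,
    Method -> {"SupportVectorMachine",
      "KernelType" -> "Polynomial",
      "SoftMarginParameter" -> Exp[c],
      "GammaScalingParameter" -> Exp[gamma],
      "BiasParameter" -> Exp[b],
      "PolynomialDegree" -> d}],
   test, "LogLikelihoodRate"];

Define the allowed range of each parameter; the polynomial degree d is restricted to integers.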

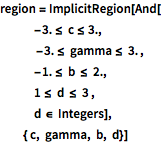

In[3]:=

region = ImplicitRegion[
  And[
   -3. <= c <= 3.,
   -3. <= gamma <= 3.,
   -1. <= b <= 2.,
   1 <= d <= 3,
   d \[Element] Integers],
  {c, gamma, b, d}]

Out[3]=

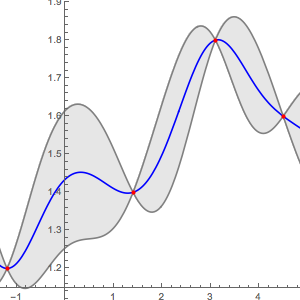
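Because loss passes Exp[c], Exp[gamma] and Exp[b] to the support vector machine, the bounds in region are on a logarithmic scale. The short sketch below maps those bounds back to the values the classifier actually receives; the association name bounds is hypothetical and only restates the limits from region. A common reason for this choice is that a log scale lets the search cover several orders of magnitude evenly, though the original example does not say so explicitly.

```wolfram
(* Bounds of c, gamma and b, copied from region *)
bounds = <|
   "SoftMarginParameter" -> {-3., 3.},
   "GammaScalingParameter" -> {-3., 3.},
   "BiasParameter" -> {-1., 2.}|>;

(* Apply the same Exp mapping that loss uses; Exp is Listable, so each {min, max} pair is mapped elementwise *)
(* gives approximately <|"SoftMarginParameter" -> {0.0498, 20.09}, "GammaScalingParameter" -> {0.0498, 20.09}, "BiasParameter" -> {0.368, 7.39}|> *)
Map[Exp, bounds]
```

So the soft margin and gamma scaling parameters range from about 0.05 to about 20, and the bias parameter from about 0.37 to about 7.4.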

Search for a good set of parameters.

In[4]:=

bmo = BayesianMinimization[loss, region]

Out[4]=

Extract the parameter values that gave the lowest loss.

In[5]:=

bmo["MinimumConfiguration"]

Out[5]=

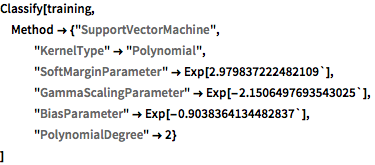
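Every evaluation of loss trains a new classifier, so the search above is slow to rerun. To see what BayesianMinimization and its "MinimumConfiguration" and "MinimumValue" properties return without that cost, one could try them on a cheap objective whose minimum is known. This is a minimal sketch; toyLoss, toyRegion and toy are hypothetical names, and since the search uses a limited number of evaluations, the result is only an approximation of the true minimizer {1, 2}.

```wolfram
(* Hypothetical toy objective: a paraboloid whose exact minimum is at {1, 2} *)
toyLoss[{x_, y_}] := (x - 1.)^2 + (y - 2.)^2;

(* Search box, declared the same way as region *)
toyRegion = ImplicitRegion[-3. <= u <= 3. && -3. <= v <= 3., {u, v}];

toy = BayesianMinimization[toyLoss, toyRegion];

(* Best point evaluated so far and the objective value there; typically close to {1, 2} and 0 *)
{toy["MinimumConfiguration"], toy["MinimumValue"]}
```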

Train a classifier with the parameters found by the search.

In[6]:=

Classify[training,
 Method -> {"SupportVectorMachine",
   "KernelType" -> "Polynomial",
   "SoftMarginParameter" -> Exp[2.979837222482109`],
   "GammaScalingParameter" -> Exp[-2.1506497693543025`],
   "BiasParameter" -> Exp[-0.9038364134482837`],
   "PolynomialDegree" -> 2}]

Out[6]=
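Copying the numbers from Out[5] by hand is error-prone. The sketch below assumes that training, test and bmo from the cells above are still defined in the session. It reads the configuration directly from bmo, retrains, and reports accuracy alongside the log-likelihood rate that was optimized. The names cOpt, gammaOpt, bOpt, dOpt and tuned are hypothetical.

```wolfram
(* Unpack the best {c, gamma, b, d}; new names avoid assigning to the symbols c, gamma, b, d used in region *)
{cOpt, gammaOpt, bOpt, dOpt} = bmo["MinimumConfiguration"];

(* Retrain with the same method and the same Exp mapping as loss *)
tuned = Classify[training,
   Method -> {"SupportVectorMachine",
     "KernelType" -> "Polynomial",
     "SoftMarginParameter" -> Exp[cOpt],
     "GammaScalingParameter" -> Exp[gammaOpt],
     "BiasParameter" -> Exp[bOpt],
     "PolynomialDegree" -> Round[dOpt]}];

(* Evaluate on the held-out test set *)
ClassifierMeasurements[tuned, test, {"Accuracy", "LogLikelihoodRate"}]
```

Because the same test set was used both to choose the parameters and to measure them here, these numbers are likely somewhat optimistic. A separate validation split for the search would give a less biased estimate.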