Prevent Overfitting Automatically

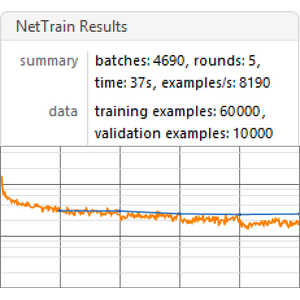

Overfitting is the failure of a model to generalize to data outside of the training set. One way to prevent overfitting is to monitor the performance of a model on a held-out validation dataset and to stop training if the performance on the validation set stops improving. This example demonstrates how the TrainingStoppingCriterion option for NetTrain allows you to specify a criterion by which to determine whether or not a net is improving and thereby prevent overfitting.

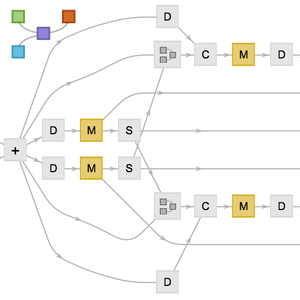

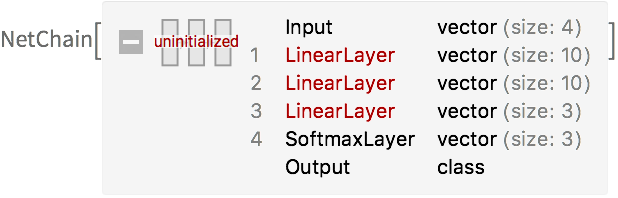

Create a simple net.

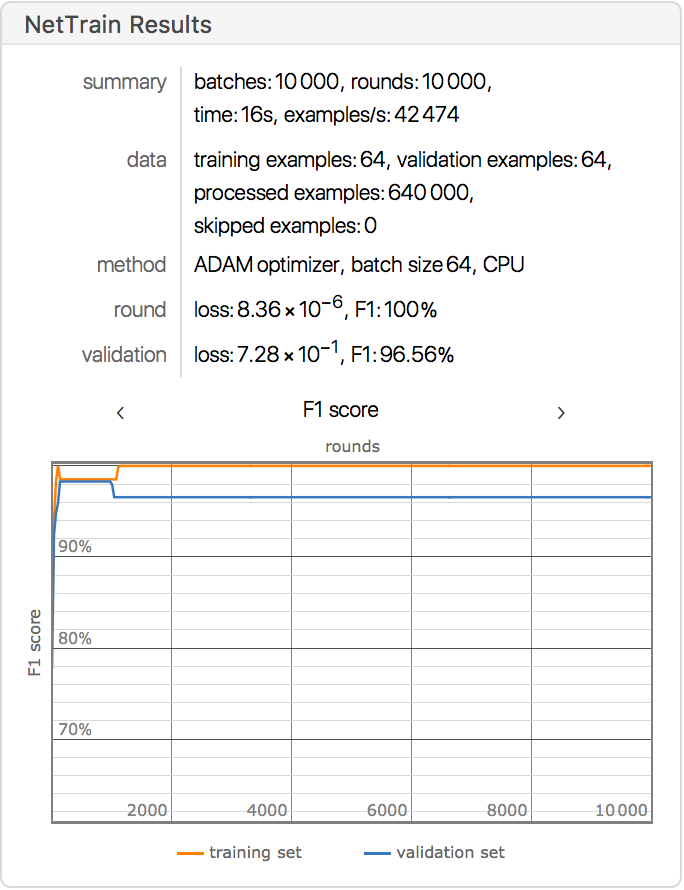

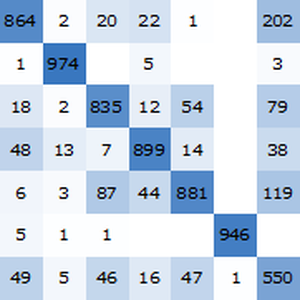

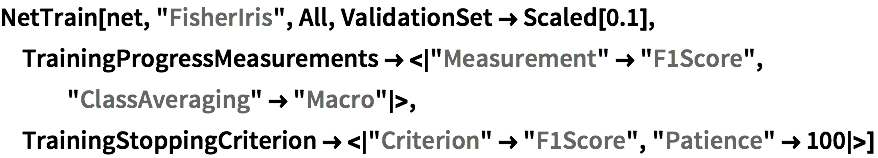

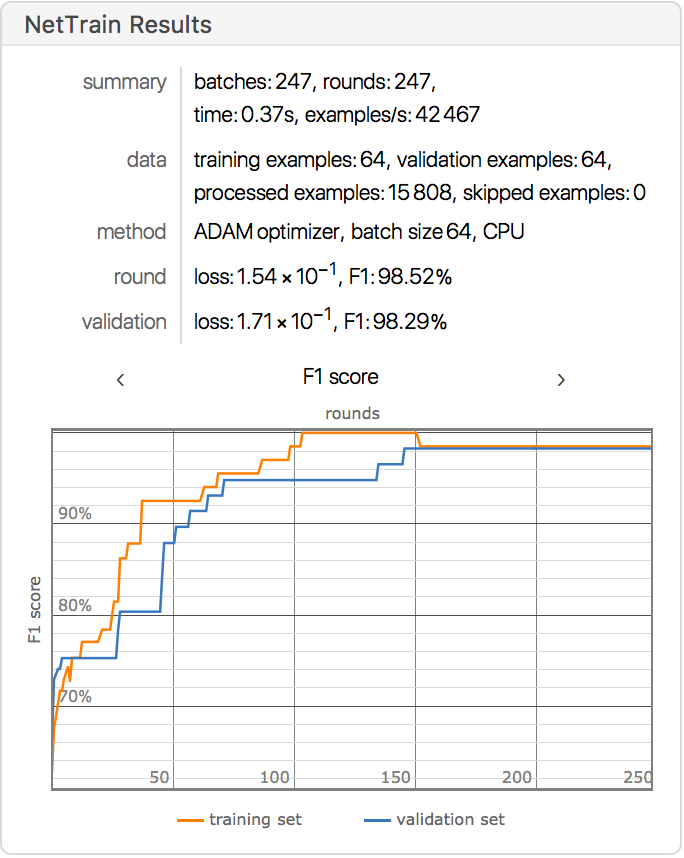

Train the net on the Iris dataset, stopping if the validation F1 score does not improve for more than 100 rounds.

Compare this to training the same net without early stopping; you get a similar F1 score but you train for much longer. Notice that this example has overfit to the training set: the validation F1 score has decreased.