Build Neural Nets for Any Language

In English, it is often efficient to tokenize text into words as a first step of a natural language processing application: words are good semantic units, and they are easily identified thanks to spaces and punctuation. In some other languages, word tokenization can be harder to perform (e.g. in Chinese) or creates too-complex semantic units (e.g. in compound words). The byte-pair-encoding (BPE) sub-word tokenization is an efficient alternative that can be applied to any language. This example demonstrates how to use a parametrized BPE embedding model as a starting point to create a neural net for a given language.

Obtain information about the parametrized BPE embeddings available in the Wolfram Neural Net Repository.

Load a model with non-default parameters.

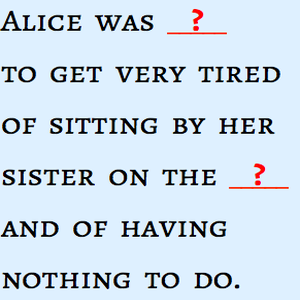

Apply the embedding layer to a sentence to return a sequence of embedding vectors (one vector for each sub-word token).

Extract the BPE tokenization part of the layer.

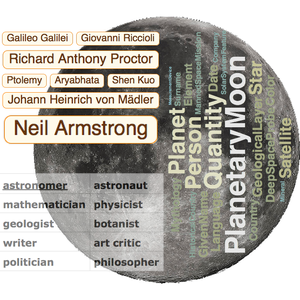

Visualize the tokenization of the sentence.

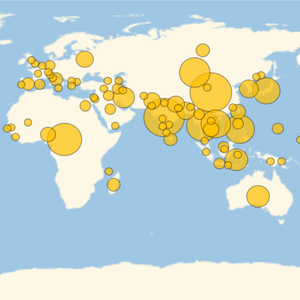

Visualize the tokenization for other languages.