Represent Word Semantics in Context

The Wolfram Neural Net Repository contains many models that represent words as numeric vectors. These semantic vectors are a key building block for language applications. This example demonstrates an embedding model that takes the context of each word into account and illustrates how it achieves word-sense disambiguation.

The ELMo neural net learned its contextual word representations by training on a corpus of about one billion words.

By default, ELMo produces three vectors per word: one that is not contextual and two contextual levels. The net can easily be modified to output a single semantic vector for each word.
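The idea of collapsing three per-word vectors into one can be illustrated outside the net framework. The following Python sketch is not the code from this example: it assumes the three levels are combined by a plain average, which is only one possible choice (keeping just the top contextual level is another). The function name `combine_layers` and the tiny 2-dimensional vectors are hypothetical stand-ins for the real 1024-dimensional outputs.

```python
from typing import List

Vector = List[float]


def combine_layers(layers: List[List[Vector]]) -> List[Vector]:
    """Average the per-word vectors across levels.

    layers[k][t] is the vector of word t at level k.
    Averaging is an assumption made for this sketch.
    """
    n_layers = len(layers)
    n_words = len(layers[0])
    combined = []
    for t in range(n_words):
        per_layer = [layers[k][t] for k in range(n_layers)]
        # zip(*per_layer) groups the same coordinate from every level
        combined.append([sum(vals) / n_layers for vals in zip(*per_layer)])
    return combined


# Toy input: 3 levels, 2 words, 2 dimensions (made-up numbers)
layers = [
    [[1.0, 0.0], [0.0, 2.0]],  # non-contextual level
    [[2.0, 3.0], [4.0, 2.0]],  # first contextual level
    [[3.0, 6.0], [2.0, 2.0]],  # second contextual level
]
vectors = combine_layers(layers)
print(vectors)  # one vector per word
```

The result has one vector per word, with the same dimension as each input level.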

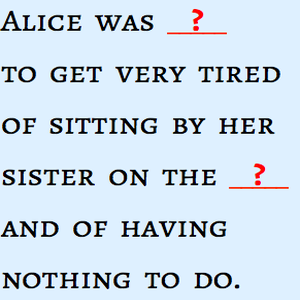

Visualize the output of the net on a text (one 1024-dimensional vector for each word).

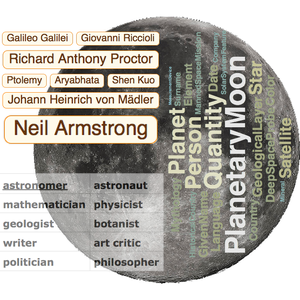

Plot a 2D projection of the semantic vectors of the word "Apple" in different contexts.
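Word-sense disambiguation shows up as distance between vectors: occurrences of "Apple" with the same sense should lie closer together than occurrences with different senses. The Python sketch below illustrates that comparison with cosine similarity. The three 4-dimensional vectors are invented toy values, not actual ELMo outputs, and the variable names are hypothetical.

```python
import math
from typing import List


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine of the angle between two vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Made-up stand-ins for contextual vectors of the word "Apple"
apple_fruit_1 = [0.9, 0.1, 0.2, 0.0]  # e.g. in a sentence about eating
apple_fruit_2 = [0.8, 0.2, 0.1, 0.1]  # e.g. in a sentence about orchards
apple_company = [0.1, 0.9, 0.0, 0.3]  # e.g. in a sentence about phones

same_sense = cosine_similarity(apple_fruit_1, apple_fruit_2)
cross_sense = cosine_similarity(apple_fruit_1, apple_company)
print(same_sense > cross_sense)
```

With real contextual vectors, the same comparison could be run on the net's output for each sentence containing the word.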

Now visualize word semantics using colors.

Implement a dimension reduction from the ELMo representation to three dimensions.

Build a net that outputs RGB colors for each word of a text.

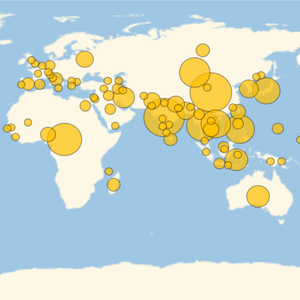
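The mapping from a word vector to a color can be thought of as two steps: a linear projection to three numbers, then a squashing function that brings each number into the range 0 to 1 so it can serve as a red, green, or blue channel. The Python sketch below illustrates this under explicit assumptions: the projection matrix is random, standing in for whatever reduction is actually fitted to the ELMo vectors, the logistic sigmoid is an assumed choice of squashing function, and the names `make_projection` and `to_rgb` are hypothetical.

```python
import math
import random
from typing import List, Tuple

Vector = List[float]


def make_projection(dim: int, seed: int = 0) -> List[Vector]:
    """Build a 3 x dim matrix of random weights.

    Placeholder for a fitted dimension reduction (assumption).
    """
    rng = random.Random(seed)
    scale = 1.0 / math.sqrt(dim)
    return [[rng.gauss(0.0, scale) for _ in range(dim)] for _ in range(3)]


def to_rgb(vector: Vector, projection: List[Vector]) -> Tuple[float, ...]:
    """Project a vector to 3 numbers and squash each into (0, 1)."""
    channels = []
    for row in projection:
        activation = sum(w * x for w, x in zip(row, vector))
        channels.append(1.0 / (1.0 + math.exp(-activation)))  # logistic sigmoid
    return tuple(channels)


dim = 8  # toy dimension; the real vectors have 1024 components
projection = make_projection(dim)

rng = random.Random(1)
words = ["I", "ate", "an", "apple"]
# Made-up vectors standing in for the net's per-word output
word_vectors = [[rng.uniform(-1.0, 1.0) for _ in range(dim)] for _ in words]

colors = [to_rgb(v, projection) for v in word_vectors]
for word, color in zip(words, colors):
    print(word, [round(c, 3) for c in color])
```

Because the projection is linear and the sigmoid is monotonic, words with nearby vectors receive nearby colors, which is what makes the coloring readable.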

Visualize the semantic colors of words in context.